By Adrija Bose

Editor’s note: This story contains references to child abuse videos.

It started with a fight between a 16-year-old girl and her 17-year-old boyfriend. She wanted to call a truce, so she agreed to his terms: “Send a nude video.” Within minutes, the video had reached the phones of several other boys in their class.

When her parents found out, they met the boy’s parents and pleaded with them to take the video down. “Even though he deleted the video, it was all over the Internet,” said Chitra Iyer, founder of Space2Grow, a consulting firm that specialises in children’s digital safety. Iyer got in touch with Rati Foundation, a non-governmental organisation (NGO) that works on taking down child sex abuse material (CSAM) from online platforms.

By the time Iyer found a way to file an FIR, the parents had backed down. “They didn’t want to face more harassment,” she told Decode.

India is one of the largest creators and consumers of CSAM, a 2019 New York Times investigation found. The American nonprofit National Center for Missing & Exploited Children (NCMEC) released a report in 2019 on the volume of child sexual abuse material uploaded to the Internet. A sobering 1,987,430 pieces of content were reported from India, the highest in the world.

Despite strict punishments under the Protection of Children from Sexual Offences Act (POCSO), social media platforms are rife with child sex abuse content. Instagram accounts that post sexualised images of Indian children lead viewers to Telegram channels, where people sell child sex abuse content for anywhere between Rs 40 and Rs 5,000.

Instagram Bait To Telegram Pipeline

Over the past month, Decode investigated a sample of these Telegram channels, which are linked to Instagram accounts and share child sex abuse videos. This reporter procured a new SIM card and created a fake identity on Instagram and Telegram to chat with the admins of these groups. As we started following some of these accounts, the Instagram algorithm recommended many more.

In June, Decode texted the admin of one such Telegram group, who goes by the name Kaushal Patel. Patel said he runs multiple Telegram channels featuring children’s videos and is now looking for buyers for a few of them, at Rs 2,500 per channel. When we asked him where he sourced these videos from, his vague response was: “Internet.” “But what do you do with these channels, how do you make money?” Decode asked Patel. He quickly sent a link to a YouTube video that explained how to make money through Terabox.

Almost all of the videos shared on these Telegram channels are hosted on Terabox, a file-sharing site. The website, founded in Japan, says users can earn $3 for every 1,000 views they get. “You can earn $609 every week with 30,000 views per day!” the Terabox website declares. It also offers users additional monetary incentives for inviting new users to the site.

This is just one of the ways of making money from selling child sex abuse videos.

For the last two months, Rati Foundation, which runs a hotline focussed on reporting CSAM, has been inundated with ‘link in bio’ cases. These are Instagram posts featuring sexualised images or short clips of minor girls, with captions that bait users with the promise of the full video at the link in the bio of the account or channel. Decode found that these links lead to Telegram channels, which in turn lead users into a maze of more Telegram groups and channels. While all of them host pornographic content, a large number of them feature children.

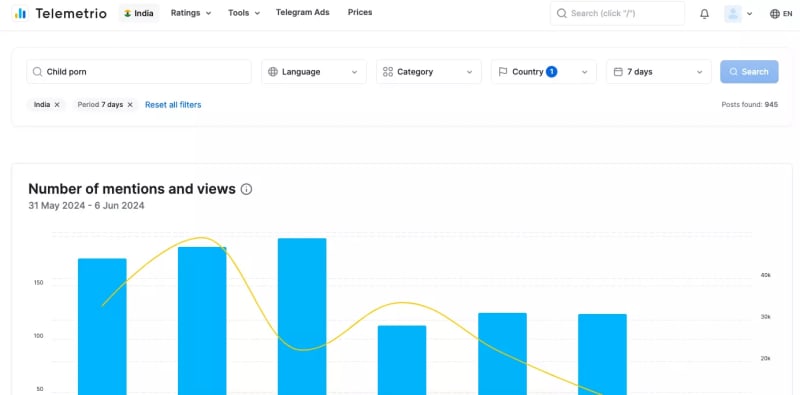

Rati Foundation reported 22 of these Instagram accounts, along with several Telegram channels, over the last two months for violating child safety regulations. While those were taken down, hundreds of similar Telegram groups still exist. A search on Telemetrio, an online catalogue and analytics tool for Telegram channels, shows that in any given week, the phrase “child porn” draws roughly 20,000 to 40,000 views in India alone.

An Instagram post from December 2024 showed a visibly minor girl squinting. Text on the video said the 14-year-old had an 18-year-old boyfriend. Superimposed on the video in big, bold letters were the words “Oyo hotel me BF” (roughly, “BF at an Oyo hotel”), describing the two having sex in a hotel room. In the comments, users asked for the full video, and the account replied, “link in the bio”.

The Business Of CSAM

Earlier this year, in April, the Karnataka High Court granted bail to a 29-year-old man accused of selling child sex abuse videos on Telegram for Rs 50 per video. He collected payments from users through UPI. The judgment noted that two more men were involved in the business. Advocate Raghunath K, who represented the accused, told Decode that no child victim had come forward to complain. “Even rape and murder accused get bail, and this was a crime that would land him in jail for only three years. So, the judge thought he should be released on bail,” the lawyer said.

The business of selling child sex abuse materials is rampant on Telegram, as Decode found through this investigation.

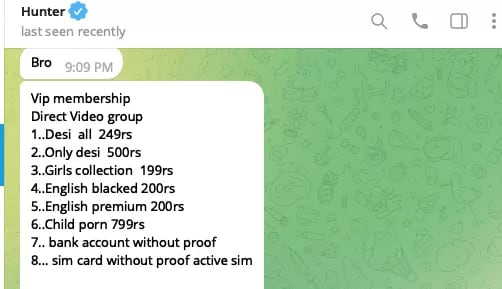

A Telegram user with the alias Hunter sent this reporter a list of the services he offers, ranging from “child porn” to “bank accounts without proof”. For Rs 799, he was willing to send 18,000 child sex abuse videos. For Rs 1,000, he would send over 35,000 such videos. “You can sell these videos at a higher rate,” he told the reporter, while continuing to press us for payment.

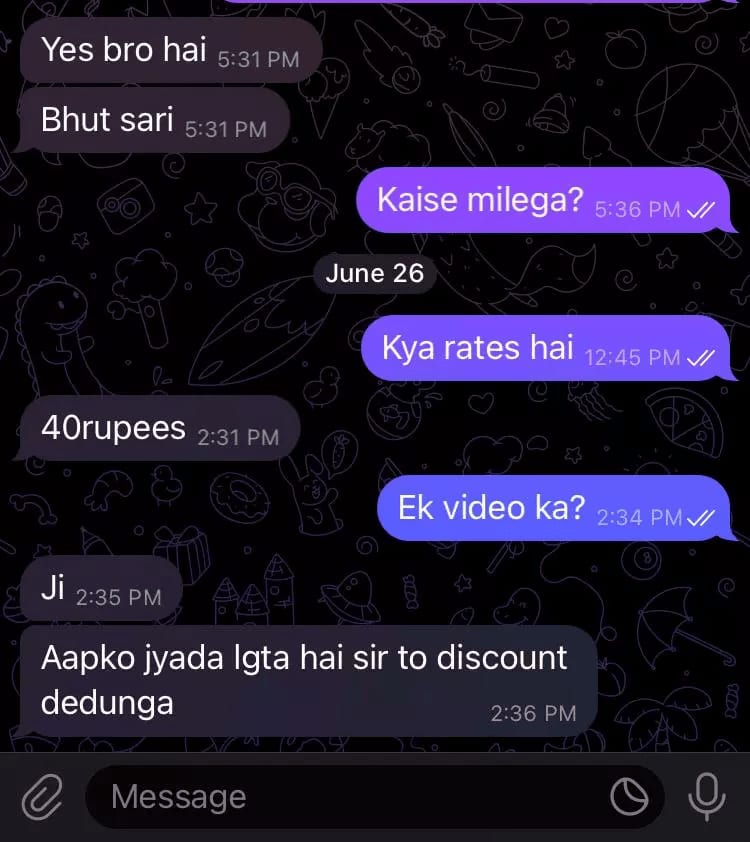

Another Telegram user said he could send child sex abuse videos for Rs 40 each. “We can give you a discount if you want,” he added.

Yet another Telegram user shared a rate card with the reporter that listed a “child rape combo package” for Rs 999. He was offering 3,000 videos for that amount.

Siddharth P, co-founder and director of Rati Foundation, said that these Instagram accounts take videos and images of children that are already available on the Internet and sexualise them by cropping and manipulating them. “The problem is so huge and widespread that it’s difficult to take action against all the accounts that are sharing such images,” he said.

Siddharth’s concern is shared by the police. Brijesh Singh, former head of cybersecurity in Maharashtra, said that the sheer volume of CSAM being shared online makes it difficult for authorities to keep up with investigations and prosecutions.

He explained that perpetrators use technical sophistication to conceal their identities and the origin of the CSAM. “The widespread use of advanced encryption techniques, such as end-to-end encrypted messaging apps, proxies and Tor networks, allows offenders to evade detection and obfuscate the digital trail, posing a significant obstacle for investigators,” he said.

Investigations are further complicated, he said, because CSAM can be shared across international borders, which requires coordination and information-sharing between law enforcement agencies around the world.

Singh, currently the Additional Director General of Police with the Government of Maharashtra, also said, “While some platforms have robust content moderation policies and dedicated resources to address this issue, others may be slower to respond or less willing to collaborate with Indian law enforcement agencies.”

On 21 March 2024, Siddharth wrote to Meta, flagging that accounts were “sexualising minors and baiting users by offering alleged CSAM links in bios.” Rati Foundation, one of Meta’s safety partners, reported three Instagram accounts that featured children in a sexualised manner, all for the purpose of distributing CSAM.

“The imagery used by these accounts has been sourced from existing content without the consent of the children involved. The identity of the minors is clearly visible in the content. The imagery has been manipulated through strategic cropping and text and captions to project sexual activity among children. The captions and text attempt to bait Instagram users by promising to lead them to CSAM content. The Telegram links in their bios link to public and private groups promoting pornographic content,” the letter to Meta read.

He further wrote, “These accounts are actively putting minors at risk while falsely defaming and denigrating them. The existence of the accounts also implies a community on Instagram interested in seeking and distributing CSAM content which is visible through some of the comments.”

The Tale Of A Child Victim

One such Instagram post featured a short clip from a leaked personal video of a 17-year-old child actor from Odisha. The ‘link in bio’ led to a Telegram channel whose admin was willing to sell the full video for Rs 100. Last year, when her Instagram account, which had thousands of followers, was hacked, the teenager was desperate to get it back. She reached out to many strangers online who offered to help. “One of them said that they needed my account details to retrieve it. I believed him,” the teenager told Decode. “But he went through my private messages on Instagram and shared the video online,” she said.

Siddharth from Rati Foundation said the organisation keeps receiving complaints about the girl’s videos. “We have removed it from platforms like Medium and Quora too,” he said. A search on Telemetrio for the girl’s name reveals that hundreds of Telegram channels are still sharing her video.

The teenager told Decode that she had filed a complaint with the police last year and given them the account details of the person who allegedly leaked her video. “But the police did nothing, they didn’t even file an FIR.” Decode called the police multiple times; however, they did not explain why no FIR had been filed in the case.

The Investigation Challenge

Even when FIRs are filed, they don’t necessarily lead to concrete action. In 2020, the Kerala police arrested 43 men suspected of possessing and sharing child sexual abuse material. The arrests followed a month-long investigation during which officers embedded themselves in Telegram groups sharing such videos. However, all the men were released on bail after two months.

Persis Sidhva, an advocate in Mumbai, told Decode that both the police and the courts are deeply uncomfortable with technology-related evidence.

In 2023, the Stanford Internet Observatory published a report that found “large-scale communities” sharing paedophilia content on Instagram. The findings were reported by the Wall Street Journal, which revealed how Instagram’s recommendation algorithm helped connect a “vast paedophile network” of sellers and buyers of illegal material.

Some of the Instagram accounts invite buyers to commission specific acts by the children. Researchers at the Stanford Internet Observatory found that “some menus include prices for videos of children harming themselves” and “imagery of the minor performing sexual acts with animals”.

“With little knowledge of safety and risks, children end up creating self-generated content,” said Chitra Iyer.

In one of the cases that Sidhva dealt with, a young girl’s videos were leaked by her ex-boyfriend. The boy created multiple Facebook accounts and messaged the girl’s friends and relatives, sharing the videos with them. The girl had only one ask, Sidhva said, “She wanted the videos to be taken down from the platforms.”

Although an FIR was registered, the videos stayed on the Internet. “The police couldn’t do anything about the content because they are not equipped to,” she said.

Anila Anand, SHO, Cybercrime, Odisha, said that the challenge lies in removing these videos from every corner of the Internet. “If a person shares these videos through WhatsApp, and they keep it on their phone, there’s nothing we can do about it,” she said, adding that she has seen several cases in which CSAM was uploaded to pornographic sites using VPNs. “We can reach out to social media platforms like Facebook and Instagram to take down such videos, but we have to be quick in such cases and only when the victim is aware of who shared the videos,” the police officer said.

That’s not always the case. Once a child sex abuse video is out, it is shared across multiple platforms, making it difficult to trace where it originated, Anand admitted.

“The process is not easy for the child,” lawyer Sidhva pointed out, explaining that the police go through the images in front of the minor victim. “Under the POCSO Act, there is no proper protocol to ensure that the child doesn’t feel retraumatised,” she said.

Back in Odisha, the 17-year-old has gone off all social media platforms. “I barely use my phone now. I am scared,” she said.

— — —

Decode reached out to Meta and Telegram to ask whether they are aware of the enormous scale at which CSAM is being spread on their platforms and whether they are taking any action. The story will be updated if we receive a response.